Game engines often have some form of metadata system that can be used for a myriad of tasks. My little home brew engine, for example, uses metadata to facilitate serialization, allow object allocation by name, content-updating, etc. It’s all quite common, but creating such a system is actually pretty complex when you start to get into the nitty gritty implementation details. Your metadata design choices very quickly start to inform many other areas of your engine design.

Various engines create and store their metadata in various ways. Unreal Engine 3, for example, uses UnrealScript to describe game logic as well as provide the source for the engine metadata. Their UC compiler creates C++ headers which are compiled into the game binary while the metadata is shunted over to the .u script packages. I’ve never liked this scheme for a couple of reasons. Perhaps chief among them is that it requires programmers to use one language to write their type definitions and a different language for their code. In other words, they have to author their C++ header files by proxy. There are a few other problems with that system, but it’s not helpful to dwell on them.

A Little Context to Start

My hobby engine uses a system where any class or struct can have metadata, but that’s not strictly required. Additionally, each metadata-enabled type is not required to have a virtual function table. Let’s consider a simple class sitting in a header file called ChildType.h:

ChildType.h

class childType : public parentType

{

DECLARE_TYPE( childType, parentType );

void RedactedFunction( float secretSauce );

float unsavedValue; /// +NotSaved

double * ptrToDbl;

ValueType_t someValues[3];

};

By virtue of using the DECLARE_TYPE macro, it is clear this type is intended to be metadata-enabled, but it’s not obvious where that data comes from. We need to express all the critical information about the class in such a way that we can serialize it or generically inspect it. The hows and whys of my design choices aren’t important, but my solution is to define a new cpp file that looks like this:

ChildTypeClass.cpp

MemberMetadata const childType::TypeMemberInfo[] = {

{"unsavedValue", eDT_Float, eDT_None, eDF_NotSaved, offsetof(childType, unsavedValue), 1, NULL },

{"ptrToDbl", eDT_Pointer, eDT_Float64, eDF_None, offsetof(childType, ptrToDbl), 1, NULL },

{"someValues", eDT_StaticArray, eDT_Struct, eDF_None, offsetof(childType, someValues), 3, ValueStructClass }

};

IMPLEMENT_TYPE( childType, parentType );

There’s a lot going on here, and there is a lot of unimportant plumbing hidden behind those DECLARE_TYPE and IMPLEMENT_TYPE macros. For this discussion, the DECLARE_TYPE macro adds a class-static array of MemberMetadata structures. Each element of that array specifies a name, primary type, secondary type, flags (like +NotSaved), the offset of the member inside the structure, static array length (usually 1), and target metadata type (or NULL).
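To make that seven-field layout concrete, here is a minimal sketch of what such a record could look like and how generic code might use it. The field names, the enum definitions, the `Example` stand-in type, and the `FindMember` and `MemberAddress` helpers are all assumptions made for illustration; they are not the engine’s actual definitions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Hypothetical enums: only the enumerator names come from the table above.
enum DataType { eDT_None, eDT_Float, eDT_Float64, eDT_Pointer, eDT_StaticArray, eDT_Struct };
enum DataFlags { eDF_None = 0, eDF_NotSaved = 1 };

struct TypeMetadata;  // per-type record, left opaque in this sketch

// One row per data member, mirroring the seven fields described above.
struct MemberMetadata
{
    char const *         name;
    DataType             primaryType;
    DataType             secondaryType;
    unsigned             flags;
    std::size_t          offset;
    std::size_t          arrayLength;
    TypeMetadata const * targetType;
};

// A stand-in type so the sketch compiles on its own.
struct Example
{
    float    unsavedValue;
    double * ptrToDbl;
};

static MemberMetadata const kExampleMembers[] = {
    { "unsavedValue", eDT_Float,   eDT_None,    eDF_NotSaved, offsetof(Example, unsavedValue), 1, nullptr },
    { "ptrToDbl",     eDT_Pointer, eDT_Float64, eDF_None,     offsetof(Example, ptrToDbl),     1, nullptr },
};

// Linear search by name; returns nullptr when the member is unknown.
inline MemberMetadata const * FindMember( MemberMetadata const * members, std::size_t count, char const * name )
{
    assert( members != nullptr && name != nullptr );
    for ( std::size_t i = 0; i < count; ++i )
    {
        if ( std::strcmp( members[i].name, name ) == 0 )
            return &members[i];
    }
    return nullptr;
}

// Generic access: use the recorded offset to reach the member's storage.
inline void * MemberAddress( void * object, MemberMetadata const & member )
{
    return static_cast<char *>( object ) + member.offset;
}
```

A serializer built on something like this would walk the table, skip rows flagged `eDF_NotSaved`, and read or write the bytes at `MemberAddress` according to the recorded primary type.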

That’s a lot of data to type in correctly and maintain over the life of an engine. I knew very early on that while manual typing was ok to bootstrap the project, automation would have to enter the picture eventually.

Automation Enters the Picture

As I said, my design choices aren’t the focus of this article – perhaps another time. This article is about how I’m going about creating my metadata.

I very briefly considered writing a C++ parser... BAHAHAHAHA! Oh, man…that’s rich! But seriously, compiler grammars are one of those weird things that amuse me, and I actually considered writing just enough of a “loose” C++ parser to pull out the information I wanted. When I stopped to think about the magnitude of language features and weird cases in C++, however, reality came crashing in, and it’s easy to see why I abandoned the idea.

Enter Clang

Clang is a C language family front-end for LLVM. Clang is free (BSD), and Clang is awesome! There are a few layers to the onion, but at a high level, Clang is a C++ to LLVM compiler. When used in tandem with LLVM, it’s a complete optimizing compiler ecosystem. More importantly for our purposes, however, libClang provides a relatively simple C-based API into the abstract syntax tree (AST) created by the language parser. That’s HUGE for creating metadata. Seriously, it’s almost all of the heavy lifting in something like this. Making matters even easier, there are Python bindings if that’s your thing. The Python bindings are probably a little easier to work with due to the fast iteration times and cleaner string handling.

A Little Glossary Action

The libClang API uses a handful of simple concepts to model your code in AST form.

Translation Unit – In practical terms, it’s one run of the compiler that creates one AST. We can think of it as one compiled file + any files it includes. In terms of libClang, it’s the base-level container and the jumping-off point for the data-gathering work we’ll need to do.

Cursor – A cursor represents one syntactic construct in the AST. They can represent an entire namespace or a simple variable name. They also maintain parent/child relationships with other cursors. For our purposes, the cursors are the nodes in the AST we need to traverse. They also contain references to the source file position where they were found.

Type – This one is easy. The cursors we’re looking for will often reference a type. These are literally the language types. Keep in mind that Clang models the entire type system, so typedefs are different from the types they alias. We’ll get into that.

The Plan

Once the parsing is taken care of, the solution is pretty straightforward.

- Set up the Environment

- For each header, make a Translation Unit.

- Traverse the AST for interesting type definition cursors.

- For each type definition, look for member data cursors and other information.

- Once we have all the information we need about a type, dump text to a file.

Setup – Compiler Environment

Obviously, we need one or more header files to work on, but there’s more – more than I naively anticipated anyway. Even if we just hardcode a list of headers, we won’t be able to compile them by themselves. We need to replicate enough of your normal project environment to get the same parsing result that your normal compiler would create. We need to use the same command line preprocessor defines (-D’s) as well as the same additional include folders (-I’s).

I’m going to leave that as an exercise for the reader, but my solution involved parsing my Visual Studio project files. It wasn’t a huge deal, but this is where project structure will play a decently sized role, and a well-structured project will be easier to configure.
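Wherever the settings come from, the last step is mechanical: turn the include folders and defines into the `-I` and `-D` strings that Clang expects. The sketch below assumes the two lists have already been extracted from the project files. `BuildClangArgs` and `ToArgv` are hypothetical helper names, not part of libClang.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Turn include directories and preprocessor defines into Clang-style arguments.
inline std::vector<std::string> BuildClangArgs( std::vector<std::string> const & includeDirs,
                                                std::vector<std::string> const & defines )
{
    std::vector<std::string> args;
    for ( std::string const & dir : includeDirs )
    {
        assert( !dir.empty() );  // a bare "-I" would swallow the next argument
        args.push_back( "-I" + dir );
    }
    for ( std::string const & def : defines )
    {
        assert( !def.empty() );
        args.push_back( "-D" + def );
    }
    return args;
}

// The C API wants an array of C strings. These pointers borrow from 'args'
// and are only valid while 'args' is alive and unmodified.
inline std::vector<char const *> ToArgv( std::vector<std::string> const & args )
{
    std::vector<char const *> argv;
    for ( std::string const & arg : args )
        argv.push_back( arg.c_str() );
    return argv;
}
```

Keeping the owning `std::string` vector separate from the borrowed pointer array makes the lifetime requirement explicit: the strings must outlive the parse call.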

Setup – Header Environment

Another thing I hadn’t considered is what sort of environment a header lives in. There’s no way to know what files need to be included prior to the inclusion of a given header. There are really only two things you can assume when dealing with this problem – Assume any pre-compiled headers are included before your header and assume that nothing else needs to be included because your header can stand on its own. That second point jibes with how I generally structure my headers anyway, but it is graduated to a hard requirement in this case. Header cascades can kill your compile time performance, but external header order dependencies are worse. Obviously, it’s a good idea to mitigate header cascades with forward declarations where possible.

Time to Make the Doughnuts

Once we’ve gathered all our include paths and preprocessor symbols, invoking clang is pretty easy. We can’t just pass the header to clang_parseTranslationUnit and call it done. I suppose we can, but “.h” is an ambiguous extension. Clang won’t know how to act without some additional arguments to indicate the language to use. I also needed to include my PCH file anyway, so I ended up creating an ephemeral .cpp file to kill two birds with one stone. Conveniently, Clang has support for in-memory or “unsaved” files. Blatting a few #include strings into a buffer is all it takes. Here is the basic setup for building a translation unit for a single header called “MyEngineHeader.h”. Obviously, your environment arguments will be a bit different.

Build a Translation Unit

char const * args[] = {"-Wmicrosoft"

, "-Wunknown-pragmas"

, "-I\\MyEngine\\Src"

, "-I\\MyEngine\\Src\\Core"

, "-D_DEBUG=1" };

CXUnsavedFile dummyFile;

dummyFile.Filename = "dummy.cpp";

dummyFile.Contents = "#include \"MyEnginePCH.h\"\n#include \"MyEngineHeader.h\"";

dummyFile.Length = strlen( dummyFile.Contents );

CXIndex CIdx = clang_createIndex(1, 1);

CXTranslationUnit tu = clang_parseTranslationUnit( CIdx, "dummy.cpp"

, args, ARRAY_SIZE(args)

, &dummyFile, 1

, CXTranslationUnit_None );
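When processing many headers, the contents of the ephemeral file have to be generated rather than hardcoded. Here is a small sketch of that step, assuming a single optional PCH header followed by the header under inspection. `MakeDummySource` is a hypothetical helper name.

```cpp
#include <cassert>
#include <string>

// Build the text of the in-memory .cpp file: the PCH header first (if any),
// then the header we actually want to inspect.
inline std::string MakeDummySource( std::string const & pchHeader, std::string const & targetHeader )
{
    assert( !targetHeader.empty() );
    std::string source;
    if ( !pchHeader.empty() )
        source += "#include \"" + pchHeader + "\"\n";
    source += "#include \"" + targetHeader + "\"\n";
    return source;
}
```

The returned string has to stay alive until parsing finishes, because `CXUnsavedFile::Contents` only stores a pointer to the buffer, and `Length` should be set from the string’s `size()`.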

Build Errors? WTF?!

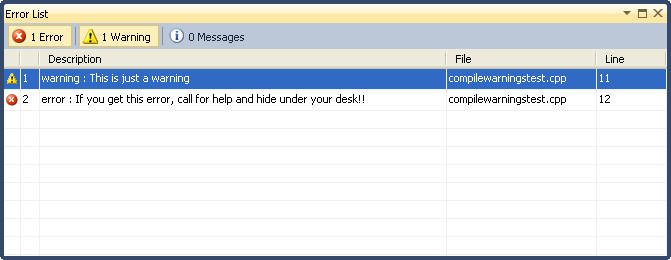

Compiling your code with Clang will probably output some unexpected errors. Remember that Clang is a C++ compiler with nuances like any other, and Clang’s nuances won’t necessarily match your other C++ compilers’ nuances. This is a good thing – seriously! Anyone who’s done cross-platform work will tell you that with every additional platform, long-standing bugs show themselves. Embracing a multi-compiler situation will force you to keep cleaner, more standards-compliant code.

Unfortunately, resolving the major problems is not optional here. We need to create a valid AST for traversal that isn’t missing any attributes that describe our data. In order to get what we want, the parser actually has to finish what it’s doing. Use clang_getDiagnostic, clang_getDiagnosticSpelling, etc. to get human-readable error messages.

Diagnostics Dump

unsigned int numDiagnostics = clang_getNumDiagnostics( tu );

for ( unsigned int iDiagIdx=0; iDiagIdx < numDiagnostics; ++iDiagIdx )

{

CXDiagnostic diagnostic = clang_getDiagnostic( tu, iDiagIdx );

CXString diagCategory = clang_getDiagnosticCategoryText( diagnostic );

CXString diagText = clang_getDiagnosticSpelling( diagnostic );

CXDiagnosticSeverity severity = clang_getDiagnosticSeverity( diagnostic );

printf( "Diagnostic[%u] - %s(%d)- %s\n"

, iDiagIdx

, clang_getCString( diagCategory )

, severity

, clang_getCString( diagText ) );

clang_disposeString( diagText );

clang_disposeString( diagCategory );

clang_disposeDiagnostic( diagnostic );

}

Time to Start Digging!

The compile step should have provided you with a valid translation unit. We need to keep that around, but we’re not going to do much with it once we’ve checked for errors. Once we get the top-level cursor with clang_getTranslationUnitCursor(), we’ll put the translation unit in a safe place and use the cursor as the top-level object from then on.

We want to find relevant types, but we have to be smart about it. The C-language Clang interface uses a clunky callback API called clang_visitChildren. (Note: the Python bindings provide a simpler non-recursive get_children interface that returns an iterator.) Clang will call your callback for each child cursor it encounters. Your callback, in turn, returns a value indicating whether the iteration should recurse to deeper children, continue to this child’s siblings, or quit entirely.

We’re only interested in type declarations at this stage, but C++ allows new types to appear in several places. Fortunately, we can pare down the file pretty quickly.

| Item | Cursor Kind | Recurse? | Remember? |
|---|---|---|---|
| Typedef | CXCursor_TypedefDecl | Yes | No |
| Class Decl | CXCursor_ClassDecl | Yes | Yes |
| Struct Decl | CXCursor_StructDecl | Yes | Yes |
| Namespace Decl | CXCursor_Namespace | Yes | No |
| Enumerations | CXCursor_EnumDecl | No | Yes? |
| Anything Else | ??? | No | No |

It should be fairly obvious for an experienced programmer what to look for – the table above is the rule-set I’ve been using. There are a few other cases that aren’t covered – function-private types, and unions. It’d be easy enough to deal with these cases too, but I haven’t had a need to serialize a union just yet, and function-private types have limited utility for serialization.

Traverse For Types

MyTraversalContext typeTrav;

clang_visitChildren( clang_getTranslationUnitCursor( tu ), GatherTypesCB, &typeTrav );

enum CXChildVisitResult GatherTypesCB( CXCursor cursor, CXCursor parent, CXClientData client_data )

{

MyTraversalContext * typeTrav = reinterpret_cast<MyTraversalContext *>( client_data );

CXCursorKind kind = clang_getCursorKind( cursor );

CXChildVisitResult result = CXChildVisit_Continue;

switch( kind )

{

case CXCursor_EnumDecl:

typeTrav->AddEnumCursor( cursor );

break;

case CXCursor_StructDecl:

case CXCursor_ClassDecl:

typeTrav->AddNewTypeCursor( cursor );

result = CXChildVisit_Recurse;

break;

case CXCursor_TypedefDecl:

case CXCursor_Namespace:

result = CXChildVisit_Recurse;

break;

}

return result;

}

Enumerations are in the mix even though they’re a bit of a special case. For metadata purposes, you might get away with just treating them as integers. You can, however, be more robust in the face of changing types if you store them as symbolic strings until you do the final bake of your data.

Panning For Gold

Now that we have a bunch of types, we might want to filter them. Remember that we might have encountered a massive header cascade in the compilation step. Logically, we’re only interested in types that were declared in the header we’re directly processing. We’ll get the other ones when we process their headers in turn. Fortunately, we can iterate the list of interesting type cursors we just created in the last step, and ask each one what file location it came from. Types from other headers can be safely culled.
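The culling step can be sketched as a simple path comparison. This version assumes the file name for each type has already been pulled out of its cursor (in the C API, via clang_getCursorLocation, clang_getSpellingLocation, and clang_getFileName) and stored next to the type name. The `GatheredType` struct and both helper functions are hypothetical names. Lowercasing the paths is an assumption that suits a case-insensitive Windows file system.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <string>
#include <vector>

// Hypothetical record: one gathered type and the file its declaration came from.
struct GatheredType
{
    std::string name;
    std::string file;
};

// Make paths comparable: unify separators and ignore case.
inline std::string NormalizePath( std::string path )
{
    std::replace( path.begin(), path.end(), '\\', '/' );
    std::transform( path.begin(), path.end(), path.begin(),
                    []( unsigned char c ) { return static_cast<char>( std::tolower( c ) ); } );
    return path;
}

// Keep only the types declared in the header currently being processed.
inline std::vector<GatheredType> CullForeignTypes( std::vector<GatheredType> const & types,
                                                   std::string const & targetHeader )
{
    assert( !targetHeader.empty() );
    std::string const wanted = NormalizePath( targetHeader );
    std::vector<GatheredType> kept;
    for ( GatheredType const & type : types )
    {
        if ( NormalizePath( type.file ) == wanted )
            kept.push_back( type );
    }
    return kept;
}
```

This only works if both paths are spelled the same way up to separators and case, so it is worth passing Clang the same absolute path you later compare against.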

Data Gathering – Internal

After culling types from other headers, we should have a much smaller list of interesting types, so we can use their cursors as starting points to learn more about them. This is where we start gathering all that data I mentioned earlier. We’ll iterate for this data in much the same way we got the type cursors in the first place. I’m sure you can do them both in one sweep, but I find the problem domain a little easier to think about in two phases. This time, instead of starting at the top of the translation unit, we can start the iteration at the type cursor. We want to iterate the type declaration cursor completely and look for a few different things.

| Item | Cursor Kind | Recurse? | Remember? |
|---|---|---|---|
| Base Type Ref | CXCursor_CXXBaseSpecifier | No | Yes |
| Member Var | CXCursor_FieldDecl | Not yet(*) | Yes |
| Static Class Var | CXCursor_VarDecl | No | No |
| Methods | CXCursor_CXXMethod | No | ??? |

(*) See examples below

It should be fairly obvious why we want base types and member variables, but static class variables might seem odd for this list. I use them for another level of filtering. I know that any class that supports metadata uses that DECLARE_TYPE macro from earlier. Of course, macros are all resolved by the preprocessor, so the C-language parser never sees that symbol, but buried within is a single static class variable with a known name and type that I can find. If it’s not there, then this class is incapable of supporting metadata, and I can just skip it entirely. Looking at the problem the other way, the only thing I need to do in order to enable metadata for a given class is add the DECLARE_TYPE macro. The rest takes care of itself.

As an aside, I haven’t bothered adding any method-invoke plumbing to my engine, but it’s fairly easy to see how one might suss that out of the data. Lots of engines do that sort of thing, so I won’t be surprised if I end up digging into it eventually.

Data Gathering – External

Once we have all the data we’ll need from inside the class, we only need a few external tidbits before we can generate the metadata file. We need to know the fully-qualified namespace of the target type as well as those of all the base types. This, too, is pretty straightforward, as you can simply ask any cursor what its lexical parent happens to be. By iterating until you hit the translation unit, you can capture all the containing scopes of a given type. There is an important caveat that bit me only after a good while. Consider this code:

Ambiguity Operator

namespace FooNS

{

class Foo

{

int dataFoo;

};

}

using namespace FooNS;

typedef Foo FooAlias;

class Bar : public FooAlias

{

int dataBar;

};

When we try to find the full scope of the base class of Bar, we won’t be aware that Foo actually lives inside of FooNS, and the using directive is what allows this to work. I am not a fan of using and often refer to it as the ambiguity operator. I suppose it happens often enough in production code, however, that we should deal with it correctly.

The way to deal with this situation is to walk the lexical parent chain as I already mentioned, but at every step along the way, we need to see if the parent scope is a class-type, struct-type, or typedef. If it is any of those, then we need to get the type record from the parent cursor, then ask for the canonical type record in order to eliminate the typedef indirections, and finally get the cursor from the type record using the clang_getTypeDeclaration function. This might seem needlessly complex, but consider gathering data from Bar in the above code example. Walking the lexical parents of Bar works as expected because it doesn’t quietly live inside of any scopes that aren’t obvious. Doing the same for the base class (FooAlias) is a different story. In that case, the base class is actually a typedef of Foo which is quietly defined inside of the FooNS namespace.

Data Gathering – Off-World

We’ve already dug into the class as well as ascended the various scopes in which a class might live. With all that accounted for, what else could there be to gather? Way back in the first section where I said my data members can have flags such as “+NotSaved”, I never really said how that information was found. Unfortunately, C++ doesn’t really provide a code-annotation scheme that integrates with the mechanics of the parser. There’s a little room for a #pragma or an __attribute__ interface, but I was unable to make those systems work how I wanted. Additionally, I don’t really like how cumbersome they would have to be in order to give per-member-data attribute granularity. Instead I simply opted to use code comments. That way, the regular code would be completely unaware of them, and I would have free rein to implement any metadata features I wanted. Obviously, this is where we step outside of the AST that has served us so well up to this point, but not really very far outside. We already have a cursor for each data member, and we can use clang_getCursorExtent to get the exact positions in the source files where this cursor occurs. From there, it becomes fairly trivial to do a localized scan for comments using any syntax you happen to want.
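As a rough illustration of that localized scan, the sketch below takes the source text and a byte offset at or just past the end of a member declaration, then looks for a `///` comment on the rest of that line and collects every `+Flag` token in it. The `/// +Name` syntax matches the ChildType.h example. The function name and the one-line-only rule are assumptions of this sketch, not necessarily how my tool does it.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Collect "+Flag" tokens from a "///" comment that trails a declaration.
// 'declEnd' is a byte offset into 'source' at or after the end of the declaration.
inline std::vector<std::string> ScanTrailingFlags( std::string const & source, std::size_t declEnd )
{
    assert( declEnd <= source.size() );
    std::vector<std::string> flags;

    // Only look at the remainder of the line the declaration ends on.
    std::size_t lineEnd = source.find( '\n', declEnd );
    if ( lineEnd == std::string::npos )
        lineEnd = source.size();
    std::string const tail = source.substr( declEnd, lineEnd - declEnd );

    std::size_t const marker = tail.find( "///" );
    if ( marker == std::string::npos )
        return flags;

    // Split the comment on whitespace and keep the words that start with '+'.
    std::istringstream words( tail.substr( marker + 3 ) );
    std::string word;
    while ( words >> word )
    {
        if ( word.size() > 1 && word[0] == '+' )
            flags.push_back( word.substr( 1 ) );
    }
    return flags;
}
```

The byte offset would come from the end of the cursor’s extent. Since the scan never looks past the line break, a comment on the following member cannot leak into this one.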

Writing it out

Ok, finally we should have all the data we need to write out our metadata. As I said earlier, I write everything out to a .cpp file for inclusion into the engine project. That’s a nice, human-readable method, but it has an implicit requirement that any metadata generation run might require a small additional build. In Visual Studio, that can also mean you have to reload the project if you’ve added any new classes. On the bright side, all your metadata is there at engine startup without load-order or chicken and egg problems.

There are other ways, however. For example, you could dump all this information out to a binary archive that is slurped into the engine on startup. It could also be demand-loaded and unloaded if the overhead starts to be an issue.
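For the .cpp route, the output step is little more than string formatting. The sketch below emits text in the shape of the ChildTypeClass.cpp example from the start of the article. The `MemberRecord` struct and `EmitTypeSource` function are hypothetical, and storing the type and flag names as strings is a simplification that keeps the generator independent of the engine’s enums.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical record of what was gathered for one data member.
struct MemberRecord
{
    std::string name;
    std::string primaryType;    // e.g. "eDT_Float"
    std::string secondaryType;  // e.g. "eDT_None"
    std::string flags;          // e.g. "eDF_NotSaved"
    unsigned    arrayLength;
    std::string targetType;     // "NULL" or the name of a metadata symbol
};

// Produce the text of the generated .cpp file for one type.
// Note: a type with no members would yield an empty initializer list,
// which a real tool would need to handle separately.
inline std::string EmitTypeSource( std::string const & typeName, std::string const & parentName,
                                   std::vector<MemberRecord> const & members )
{
    assert( !typeName.empty() );
    std::ostringstream out;
    out << "MemberMetadata const " << typeName << "::TypeMemberInfo[] = {\n";
    for ( std::size_t i = 0; i < members.size(); ++i )
    {
        MemberRecord const & m = members[i];
        out << "    { \"" << m.name << "\", " << m.primaryType << ", " << m.secondaryType << ", "
            << m.flags << ", offsetof(" << typeName << ", " << m.name << "), "
            << m.arrayLength << ", " << m.targetType << " }"
            << ( i + 1 < members.size() ? ",\n" : "\n" );
    }
    out << "};\n\n";
    out << "IMPLEMENT_TYPE( " << typeName << ", " << parentName << " );\n";
    return out.str();
}
```

Letting the compiler evaluate `offsetof` in the generated file means the tool never has to compute member layout itself, which keeps it honest across platforms and packing settings.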

Details Details…

Now that you’re all sleeping soundly after traversing the AST, I thought I’d give a few examples of how the AST is structured for common cases.

Let’s briefly consider some normal member data:

class Normal

{

int data1;

float data2;

};

The cursor hierarchy looks like this:

| Cursor Text | Cursor Kind | Type Kind |
|---|---|---|
| Translation Unit | | |
| Normal | CXCursor_ClassDecl | CXType_Record |
| data1 | CXCursor_FieldDecl | CXType_Int |
| data2 | CXCursor_FieldDecl | CXType_Float |

It’s actually pretty intuitive once you’re comfortable with some basic compiler concepts. Types are separated from semantic constructs, and POD types are directly represented in the Clang API.

Now consider only slightly more complexity:

class StillPrettyNormal

{

int * dataPtr1;

struct DataType * dataPtr2;

};

| Cursor Text | Cursor Kind | Type Kind | Pointee Type |
|---|---|---|---|
| Translation Unit | | | |
| StillPrettyNormal | CXCursor_ClassDecl | CXType_Record | |
| dataPtr1 | CXCursor_FieldDecl | CXType_Pointer | CXType_Int |
| DataType | CXCursor_StructDecl | CXType_Record | |
| dataPtr2 | CXCursor_FieldDecl | CXType_Pointer | CXType_Record(*) |

(*) Causes ref to DataTypeClass

There are two odd parts here. First, we’ve lost the notion of ‘integer’ for dataPtr1 – we’ve only been told that it’s some sort of pointer. This isn’t really a problem though, because Clang provides the clang_getPointeeType function. You can call this on any pointer type to get the next type in the chain. Pointers to pointers to pointers can be resolved this way through multiple calls if need be.

Second, we have an unexpected struct declaration in the middle of our class declaration. Well, it’s not completely unexpected, actually. The inclusion of ‘struct’ in the field declaration of dataPtr2 is also a forward declaration for the type, and Clang represents this. Fortunately, it’s a sibling of the actual field declarations and we can safely ignore it.

The final part of this example is the addition of the type reference to the externally-defined DataType. Forward declarations in C++ allow types to be mentioned and not fully defined until they are used so for now, I have to assume that DataTypeClass actually exists somewhere else. The possibility exists, however, that DataType is not a metadata-enabled class, and the reference to DataTypeClass will break at link-time. Obviously, this needs to be tightened down in my engine, and ideally, I’d like to avoid some sort of comment-flag mark-up. In order to solve this problem the correct way, I’ll probably have to shuffle the tool to look at all headers multiple times and create a dependency/attribute graph.

Ok, one more example, but I’ll warn you ahead of time that this goes a little past the edge of where I wanted to go with my metadata-creation tool.

class WackyTown

{

MyContainer< MyData* > cacheMisser;

MyContainer< MyData*, PoolAllocator<32768> > sendHelp;

};

| Cursor Text | Cursor Kind | Type Kind |
|---|---|---|
| Translation Unit | | |
| WackyTown | CXCursor_ClassDecl | CXType_Record |
| cacheMisser | CXCursor_FieldDecl | CXType_Unexposed(*) |
| MyContainer | CXCursor_TemplateRef | CXType_Invalid |
| MyData | CXCursor_TypeRef | CXType_Record |
| sendHelp | CXCursor_FieldDecl | CXType_Unexposed(*) |
| MyContainer | CXCursor_TemplateRef | CXType_Invalid |
| MyData | CXCursor_TypeRef | CXType_Record |
| PoolAllocator | CXCursor_TemplateRef | CXType_Invalid |
| (no-name) | CXCursor_IntegerLiteral | CXType_Int |

(*) See the Canonical Type!

A quick confession: I started out using Clang’s C-interface, and I’ve attempted to write this article using C-language references. However, I actually did most of my experimentation using the Python bindings. They appear a little incomplete in comparison, so it’s possible that I simply missed the correct course on this one.

There is a lot of missing data in there! First, the types for cacheMisser and sendHelp are both listed as “Unexposed”. Second, the template-ref types are invalid with no obvious way to get to the template definition. Third, nowhere is it exposed to the AST that the MyData references are actually pointers. Fourth, there is no way to know what integer value was passed to PoolAllocator. Fifth, the implicit hierarchy of the template type arguments has been flattened. What a mess!

Experimentally, I found that I could get the canonical type of the “Unexposed” type for cacheMisser and then get the declaration of the canonical type. That gave me a new CXCursor_ClassDecl cursor which made a certain amount of sense if you think of templates as meta-types and template usages as real types. The new cursor looked like this:

| Cursor Text | Cursor Kind | Type Kind |
|---|---|---|
| MyContainer< MyData *, PoolAllocator< 1000 > > | CXCursor_ClassDecl | CXType_Record |

On the surface, it seems very promising, but there were no children of this cursor. So while it looks like all of our information is represented at the top level, there was no way to dig into it. Fortunately, I’m not very template-heavy in my engine project, but this is something I’m still trying to work through for completeness.

A Note on Performance

Running my little utility script on a single header takes about 5 seconds from start to finish. Much of that time is because I’m using a fairly heavy pre-compiled header in my regular engine builds, so building an otherwise trivial file actually touches several dozen core-systems files in addition to any deep rabbit holes caused by C-Runtime inclusions. I’ve mitigated this for the most part by using Clang’s actual pre-compiled header functionality instead of just including my PCH as a normal header. As expected, this turns out to be a huge win when I’m running this across all my engine files. Much like ordinary PCHs, I pay the 5-second penalty once and then each source file is virtually instantaneous.

That’s All, Folks!

I think that’ll just about do it for my engine metadata generator. While I hope someone out there can benefit from this little bit of yak-shaving for my on-going engine project, I’m sure this wasn’t a wholly original idea. I’d love to hear what others are doing in this vein using Clang or some other tools. Until next time – hopefully not in another year.